Unreal AI-powered person detection

🔥YOLO🔥 in a Different Way

Here at y'all agency, we're always looking for new ways to create subtle yet awe-inspiring interactions with technology and art.

For a recent conference that took place in Chicago, we used Unreal Engine to create a kidney-shaped homage to the city's iconic "Bean." We placed two cameras within a giant video display, capturing a stereo image of the attendees and projecting that onto a reflective kidney within a cityscape. Attendees even took selfies in front of it, just like the tourists at Millennium Park! This was fun, but it was only the start. We wanted to take the idea a step further and make it even more interactive. But we didn't want to complicate things and clutter the booth with sensors, which also give away how the magic works.

How do you make a 24' screen interactive without a ton of sensors or extra hardware?

To do this, we needed real-time person detection and tracking. Prior to the AI revolution, this would have been done using arrays of motion detectors, like the kind you might see attached to floodlights on a house, that rely on infrared, ultrasonic waves or lasers. They're big, so you have to find someplace to put them. They're not very accurate. And having additional components makes setup, development and testing that much more complicated. Not to mention the cost. Since we already had cameras feeding into a computer, what if the computer could "see" the people and let us know where they were, like motion detectors would?

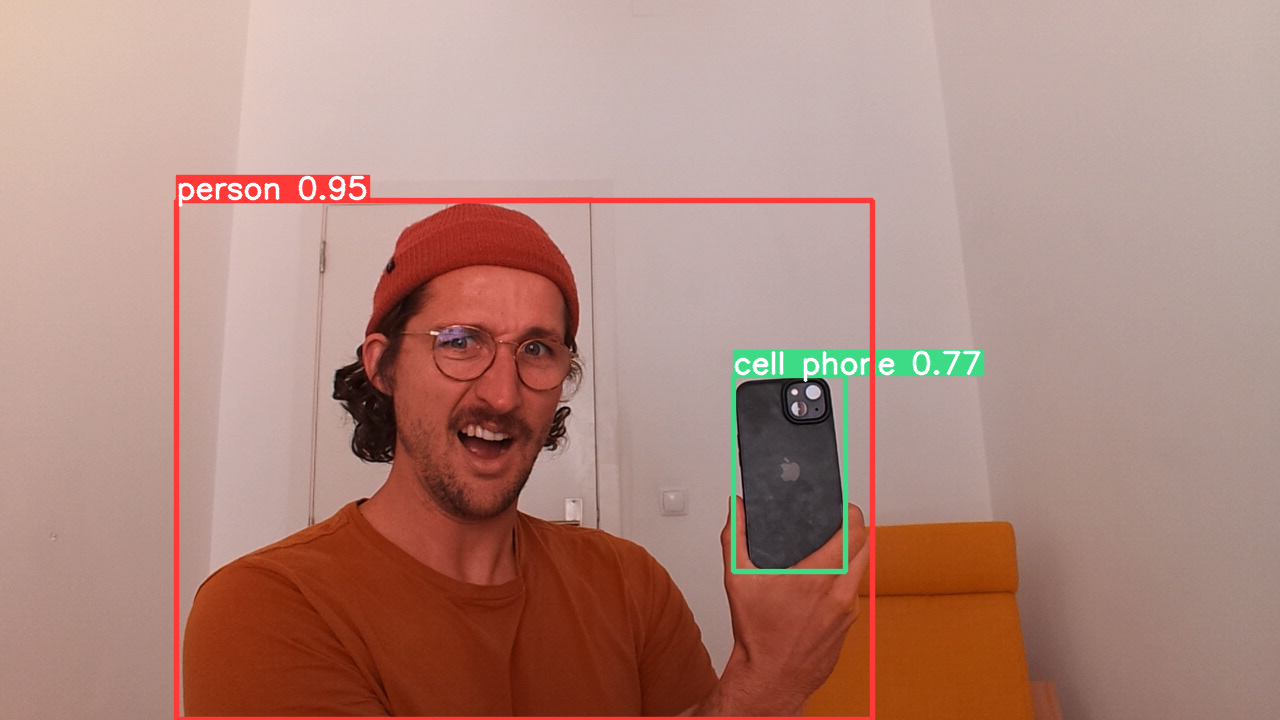

This is a field of research called "computer vision," where AI and algorithms try to figure out what's going on in a video or image. This is not easy! Scouring the internet, we found a few suitable machine learning models, but one stood out. The model goes by the name of YOLO (You Only Look Once 🥁🐍), and its combination of speed and robustness stood head and shoulders above the other techniques we found. Alongside human beings, it can detect a myriad of other objects, including phones, remotes, cars, chairs, you name it!

Our initial prototypes ran video from our cameras' NDI streams into YOLO, which processed each frame. We then either calculated how large the person was in the frame, using the bounding box area (above, in red), or figured out the position of the person by taking the center of the bounding box. This allowed us to distinguish when a person stopped in front of the exhibit from when they just walked by. Knowing their position allowed us to show them content wherever they were along the 24-foot-long screen.
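To make the bounding box math concrete, here is a minimal sketch in plain Python. It assumes the detector hands back pixel coordinates for the top-left and bottom-right corners of each box; the `Box` class, the function names and the `max_travel` threshold are all made up for illustration, and the "stopped" check is just one possible way to tell a visitor who lingers from one who walks by.

```python
from dataclasses import dataclass


@dataclass
class Box:
    """Bounding box in pixels: (x1, y1) is top-left, (x2, y2) is bottom-right."""
    x1: float
    y1: float
    x2: float
    y2: float


def area_fraction(box: Box, frame_w: int, frame_h: int) -> float:
    """Share of the frame covered by the box, a rough proxy for how close someone is."""
    w = max(0.0, box.x2 - box.x1)
    h = max(0.0, box.y2 - box.y1)
    return (w * h) / (frame_w * frame_h)


def center_x(box: Box, frame_w: int) -> float:
    """Horizontal center of the box, normalized to 0..1 across the frame."""
    return ((box.x1 + box.x2) / 2) / frame_w


def has_stopped(recent_xs: list[float], max_travel: float = 0.05) -> bool:
    """True if the recent normalized positions all sit within max_travel of each other.

    max_travel is an illustrative guess, not a tuned value.
    """
    return len(recent_xs) > 1 and (max(recent_xs) - min(recent_xs)) <= max_travel


# Example: one person detected in a 1920x1080 frame.
box = Box(480, 200, 800, 920)
print(round(area_fraction(box, 1920, 1080), 3))  # 0.111
print(round(center_x(box, 1920), 3))             # 0.333
```

Normalizing to the 0..1 range keeps the numbers independent of camera resolution, which makes it easier to map a position onto a wide screen later on.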

We refined our technique into a standalone Python app that turns video into a set of anonymous numbers and sends this data into Unreal via OSC, allowing us to control any 3D element with people's movement! And because the video itself is never saved or transmitted any further, the approach is both private and super fast.

Where the virtual world meets the real world... and not in a weird sci-fi way.

What used to be reserved for specialists and researchers is now our state of the art. AI is replacing hardware, resulting in greater creative possibilities and more efficient installations. Unreal Engine lets us leverage some of the best technology of the video game industry to create fast, beautiful interactive worlds. We believe that putting the two together, with a bit of creativity, is the next step in making awe-inspiring interactive experiences for physical spaces.